Shadow AI: The Unseen Threat Inside Financial Firms

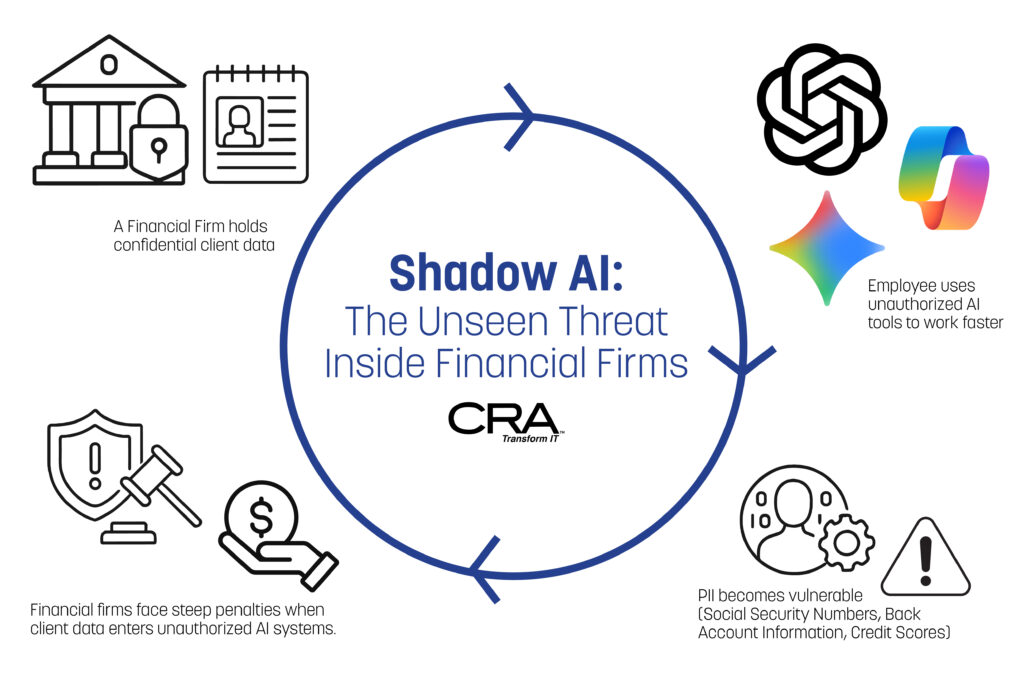

Your employees may already be exposing client data without knowing it. A financial advisor copies a client's portfolio into ChatGPT to create an investment summary. An analyst pastes confidential earnings data into Bard (now Gemini) to generate a quick report. These actions seem harmless, but they represent one of the biggest hidden threats facing financial firms today.

Shadow AI occurs when employees use unauthorized artificial intelligence tools like ChatGPT, Bard, or Copilot without IT approval or oversight, creating serious security and compliance risks. Unlike traditional software that requires installation, these AI tools are just a web browser away. Employees can access them instantly to solve problems, analyze data, or automate tasks. This convenience makes shadow AI extremely common and nearly invisible to IT departments.

The problem goes beyond simple policy violations. When financial professionals enter sensitive client information into these tools, they may unknowingly share proprietary data with external AI systems. That can lead to compliance violations, data breaches, and regulatory penalties costing firms millions. Smart monitoring, clear governance policies, and proper data loss prevention can help firms control shadow AI while still letting employees benefit from AI technology.

What Is Shadow AI and How Does It Emerge?

Shadow AI happens when employees use AI tools without IT approval or oversight. These unauthorized AI systems create blind spots where sensitive data can leak or bypass security controls.

The Difference Between Shadow AI and Shadow IT

Shadow IT covers any technology used without IT department approval. This includes apps, tools, or services that employees adopt on their own.

Shadow AI is more specific. It focuses only on artificial intelligence tools like ChatGPT, Claude, or Copilot that operate without formal oversight.

Key differences include:

- Scope: Shadow IT covers all unauthorized technology, while shadow AI targets only AI applications

- Data handling: AI tools process and potentially store sensitive inputs in unpredictable ways

- Risk profile: Shadow AI introduces unique threats around data training, model outputs, and decision-making processes

Shadow AI creates deeper security concerns. Traditional shadow IT might expose login credentials or system access. Shadow AI can inadvertently feed confidential client data into external models whose providers may retain it or use it for training.

How Generative AI Tools Enter the Workplace

Generative AI adoption often starts with individual employees seeking faster ways to complete tasks. Many popular AI tools have free tiers, run in the browser, and require no installation.

Employees typically begin using these tools for simple tasks. They might ask ChatGPT to summarize documents or use Bard to draft emails.

The accessibility creates rapid adoption. Workers don't need procurement approval or IT setup to start using most AI platforms.

Common entry points include:

- Personal accounts on public AI platforms

- Browser extensions that add AI features to existing workflows

- SaaS applications that quietly roll out new AI capabilities

- API integrations built by developers without security review

Most employees don't realize they're creating security risks. They see these tools as productivity enhancers rather than potential data exposure points.

Unsanctioned Adoption Paths in Financial Firms

Financial employees often use AI tools to handle complex, data-heavy tasks. This creates multiple pathways for shadow AI to emerge within firms.

Portfolio analysis represents a major risk area. Advisors might paste client investment data into ChatGPT to generate summaries or recommendations without realizing the data could be stored externally.

Document processing creates another entry point. Employees use AI to review contracts, compliance reports, or research documents that contain sensitive client information.

Client communication drives shadow AI adoption. Staff members use generative tools to draft emails, proposals, or presentations that reference confidential financial details.

Development teams pose additional risks. They might integrate AI APIs into internal applications or build chatbots that access customer databases without proper security review.

The urgency of financial deadlines often pushes employees toward unauthorized solutions. When approved tools feel slow or limited, workers turn to readily available AI platforms to meet client demands.

Why Employees Turn to Unapproved AI Tools

Workers embrace unauthorized AI tools primarily for speed and productivity gains, often filling gaps left by absent company policies. Current data shows 59% of employees use unapproved AI tools regularly, with many sharing sensitive information through these platforms.

Productivity Gains and Everyday Use Cases

Financial professionals discover AI tools can cut routine tasks from hours to minutes. Research analysts use ChatGPT to summarize market reports quickly. Portfolio managers ask AI to explain complex investment strategies in simple terms.

Administrative work becomes much faster with AI help. Employees paste client emails into AI tools to draft responses. They use AI to create meeting summaries and write follow-up notes.

Common productivity uses include:

- Email drafting and responses

- Document summarization

- Data analysis explanations

- Report writing assistance

- Client presentation creation

The appeal is clear when AI-generated content can save two to three hours a day. Workers see immediate results without waiting for IT approval or training programs.

Many employees use these tools daily because they work. A compliance officer might use AI to review policy documents faster. An advisor could get help explaining complex financial concepts to clients.

Innovation Versus Oversight: The Temptation of Speed

Traditional IT processes move slowly while AI innovation happens daily. Employees want to try new AI features as soon as they launch. Company approval processes often take weeks or months.

Workers feel pressure to stay competitive and efficient. They see colleagues at other firms using AI tools openly. The fear of falling behind drives quick adoption decisions.

Speed versus control creates tension:

- New AI features launch almost weekly

- IT reviews can take 30-90 days

- Competitors may gain advantages

- Client expectations increase

Employees often believe they can manage risks themselves. They think using AI for "simple tasks" poses no real danger. This confidence leads to sharing client data without proper safeguards.

The gap between AI capabilities and company policies grows wider each month. Workers choose productivity over compliance when oversight slows them down.

Lack of Official AI Policies

Many financial firms still lack clear AI usage guidelines, and employees often receive no training on approved tools or data protection rules. This policy vacuum encourages shadow AI adoption.

Studies show 57% of managers actually support unapproved AI use by their teams. Without official guidance, workers create their own rules. They decide what data feels "safe" to share with AI platforms.

Policy gaps include:

- No approved AI tool lists

- Missing data classification guidelines

- Unclear consequences for violations

- No AI security training programs

Companies that ban all AI use often see higher shadow adoption rates. Employees find ways around restrictions when legitimate options don't exist. Complete bans push AI use further underground.

Clear policies with approved alternatives reduce risky behavior. Workers need official channels for AI productivity gains. Without them, they will find unofficial ones.

Data at Risk: The Hidden Dangers of Shadow AI

Financial firms face serious risks when employees use unauthorized AI tools with sensitive client information, proprietary trading algorithms, and confidential business data. The consequences range from accidental data exposure to potential regulatory violations that can cost millions in fines.

Examples of Sensitive Data Exposure

Financial professionals regularly handle highly sensitive information that becomes vulnerable when entered into unauthorized AI platforms. Client portfolio data represents one of the most critical risks, as advisors might paste investment holdings, account balances, and personal financial information into ChatGPT or Copilot for analysis.

Personally identifiable information (PII) creates another major exposure point. This includes:

- Social Security numbers

- Bank account details

- Credit scores and financial history

- Investment preferences and risk profiles

Trading firms face additional risks with market-sensitive data. Employees might input upcoming merger details, earnings forecasts, or strategic investment plans into Bard or other AI tools without realizing these platforms may store and learn from this information.

Regulatory compliance data also becomes exposed when staff use shadow AI. Documents related to KYC procedures, anti-money laundering investigations, and audit findings could inadvertently leak through unauthorized AI interactions.

AI Tools and Proprietary Code Leakage

Financial institutions invest heavily in developing proprietary algorithms and trading systems that provide competitive advantages. When developers use ChatGPT or Copilot to debug code or optimize algorithms, they risk exposing these valuable assets.

Trading algorithms represent particularly sensitive intellectual property. A quantitative analyst might paste Python code for risk calculations or portfolio optimization into an AI tool, potentially revealing sophisticated mathematical models worth millions in development costs.

Risk management systems face similar vulnerabilities. Custom code that calculates Value at Risk, stress testing models, or compliance monitoring systems could leak through shadow AI usage.

Database schemas and API configurations also become vulnerable. Developers seeking help with database queries might accidentally expose table structures, relationship mappings, or security configurations that reveal system architecture.

Proprietary machine learning models used for credit scoring or fraud detection can be partially exposed the same way, for example when a developer pastes model code or feature definitions into an unauthorized AI tool for help.

Real-World Consequences for Financial Firms

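The Samsung incident, although outside financial services, shows how quickly corporate data can leak through shadow AI. In 2023, Samsung engineers pasted confidential source code into ChatGPT to debug it. That sent the code to an external service whose consumer terms at the time allowed inputs to be used for model training. Samsung responded by restricting generative AI tools on company devices.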

Regulatory penalties pose significant financial risks. Under GDPR, fines for the most serious data protection violations can reach 4% of a firm's global annual turnover. A single incident involving client data exposure through unauthorized AI tools could trigger investigations and substantial penalties.

Client trust erosion creates long-term business impact. When clients learn their financial information was processed by unauthorized AI platforms, some may move their accounts to competitors. Wealth management firms, whose client relationships rest on discretion, are especially exposed to losing high-net-worth clients after a data security incident.

Competitive disadvantage results from proprietary code exposure. Trading firms that lose algorithm secrets through shadow AI usage may see their market edge disappear as competitors potentially access similar strategies.

Insurers increasingly scrutinize AI governance policies when underwriting cyber liability coverage. Firms without proper shadow AI controls may face higher premiums or coverage exclusions for AI-related data breaches.

Compliance and Legal Pitfalls

Shadow AI creates serious legal exposure for financial firms across multiple regulatory frameworks. When employees use unauthorized AI tools with client data, they can trigger violations of GDPR, HIPAA, and CCPA without warning, while making audit compliance nearly impossible to maintain.

Regulatory Risks: GDPR, HIPAA, and CCPA Hazards

Financial firms face steep penalties when client data enters unauthorized AI systems. GDPR fines can reach €20 million or 4% of global revenue, whichever is higher.
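The "whichever is higher" rule is easy to misread, so here is a small worked calculation. This is a minimal sketch of the upper-tier ceiling in GDPR Article 83(5) only. The function name is illustrative, and the result is a legal maximum, not a prediction of the fine a regulator would actually impose.

```python
from decimal import Decimal

# Upper-tier GDPR ceiling (Article 83(5)): EUR 20 million or 4% of total
# worldwide annual turnover for the preceding financial year, whichever is higher.
FIXED_CAP_EUR = Decimal("20000000")
TURNOVER_RATE = Decimal("0.04")


def gdpr_upper_tier_cap(annual_turnover_eur: Decimal) -> Decimal:
    """Return the maximum upper-tier fine, rounded to whole euros (illustrative)."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    return max(FIXED_CAP_EUR, TURNOVER_RATE * annual_turnover_eur).quantize(Decimal("1"))


# EUR 300M turnover: 4% is EUR 12M, so the fixed EUR 20M ceiling applies.
print(gdpr_upper_tier_cap(Decimal("300000000")))   # 20000000
# EUR 2B turnover: 4% is EUR 80M, which exceeds EUR 20M.
print(gdpr_upper_tier_cap(Decimal("2000000000")))  # 80000000
```

The crossover sits at EUR 500 million in turnover. Below that, the fixed EUR 20 million figure is the ceiling; above it, the percentage dominates.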

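HIPAA applies when a financial firm handles protected health information, for example as a business associate serving a health plan. Civil penalties can reach $1.5 million per year for violations of an identical provision, a cap now adjusted annually for inflation. CCPA adds another layer of risk for firms handling California residents' data.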

The biggest danger comes from data transfers. When an employee pastes client information into ChatGPT or similar tools, that data leaves the firm's control perimeter. Free AI tools process data on the provider's servers, often in locations the firm cannot verify or control.

This creates immediate compliance issues:

- Unlawful cross-border data transfers under GDPR

- Unauthorized disclosure under HIPAA

- Consumer privacy breaches under CCPA

- Loss of trade secret protection for proprietary methods

Financial regulators treat these as serious infractions. The firm remains liable even when employees act without permission.

When AI Use Leads to Violations

Violations happen faster than most firms realize. A financial advisor copying client portfolio data into an AI tool creates instant exposure.

Common violation scenarios include:

- Investment advisors using AI to draft client communications with personal financial data

- Compliance teams feeding regulatory filings into unauthorized tools

- Analysts pasting market research containing client positions

- Support staff using AI chatbots to answer questions about specific accounts

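Each action can trigger multiple regulatory violations. GDPR requires a lawful basis for every processing activity. It also requires a written data processing agreement with any processor that handles personal data on the firm's behalf. HIPAA requires business associate agreements with third parties that handle protected health information.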

The legal risk compounds because firms often discover shadow AI use months later. By then, the data exposure is complete and irreversible.

Studies show 79% of IT leaders report negative outcomes from shadow AI use. These include data leaks and compliance failures that create lasting legal liability.

Audit and Traceability Challenges

Shadow AI makes compliance audits extremely difficult. Regulators expect firms to track how client data gets processed and stored.

Unauthorized AI tools create audit blind spots. Firms cannot produce required documentation about:

- Where sensitive data was sent

- How long external systems retained it

- What security controls protected it

- Whether data was deleted properly

Traditional audit trails break down when employees use external AI services. Most consumer AI tools don't provide enterprise-grade logging or data lineage tracking.
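For sanctioned tools, one way to close part of that gap is to log each AI interaction in a structured form. The record should answer the questions auditors ask about where data went, how long it was kept, and what protected it. This is a minimal sketch assuming a hypothetical `AIUsageRecord` schema; the field names and tool name are illustrative, not a regulatory standard. It stores a SHA-256 fingerprint of the prompt rather than the prompt itself, so the audit log does not become a second copy of sensitive data.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    """Hypothetical audit record for one interaction with an approved AI tool."""
    user_id: str
    tool: str                 # which AI service received the data
    destination_region: str   # where the provider states the data is processed
    retention_days: int       # provider's stated retention period
    data_classification: str  # e.g. "public", "internal", "restricted"
    controls: list[str]       # safeguards applied, e.g. DLP scan, SSO
    prompt_sha256: str        # fingerprint of the input, not the input itself
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def make_record(user_id: str, tool: str, region: str, retention_days: int,
                classification: str, controls: list[str], prompt: str) -> AIUsageRecord:
    """Build a record, hashing the prompt so the log holds no raw client data."""
    digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    return AIUsageRecord(user_id, tool, region, retention_days,
                         classification, controls, digest)


record = make_record("jdoe", "approved-ai-gateway", "eu-west", 30,
                     "internal", ["dlp_scan", "sso"], "Summarize Q3 market outlook")
print(json.dumps(asdict(record), indent=2))
```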

This creates specific compliance problems during regulatory examinations. Auditors expect detailed records of data handling practices.

Financial firms must demonstrate that they maintain appropriate safeguards for client information. Shadow AI makes this nearly impossible because the firm has no visibility into how external AI systems process its data.

The lack of traceability becomes especially problematic during data breach investigations. Regulators require comprehensive incident reports that shadow AI usage makes difficult or impossible to complete.

Cybersecurity Vulnerabilities Introduced by Shadow AI

Shadow AI creates multiple attack pathways that traditional security tools cannot detect. These vulnerabilities stem from uncontrolled access to external AI platforms and the unpredictable nature of AI-generated outputs.

Open-Source AI and External Threats

Unvetted open-source AI models and tools can present significant security gaps for financial firms. Unlike enterprise-grade solutions, many lack built-in access controls, encryption, and audit logging.

Many employees download AI applications directly to their devices. This bypasses software approval and, in some cases, the security monitoring applied to sanctioned tools. Sensitive client data can then flow to external servers without IT oversight.

Popular AI platforms typically retain conversation history on their own or third-party cloud infrastructure. Financial advisors using these tools can inadvertently create lasting records of confidential information. Attackers who compromise an AI platform or a user's account could then access that stored data.

Key vulnerabilities include:

- No data residency controls

- Weak authentication protocols

- Limited audit trails

- Shared computational resources

AI-Generated Content Risks

AI tools can produce content that contains hidden security threats. Financial firms face unique risks when employees rely on AI-generated documents or code snippets.

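Researchers have shown that carefully crafted prompts can sometimes make models reproduce fragments of their training data. Attackers also use prompt injection, hiding instructions in documents, emails, or web pages, to manipulate AI tools into leaking information from the current session or from connected systems.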

AI-generated financial reports may contain fabricated data or recommendations. These errors can lead to compliance violations and regulatory penalties. Employees often trust AI outputs without proper verification.

Malicious or compromised AI tools, such as rogue browser extensions, can insert tracking pixels or malicious links into generated content. When such documents are shared with clients, they become attack vectors targeting external networks.

Attack Surfaces and Cyber Exploits

Shadow AI expands the attack surface beyond traditional IT infrastructure. Each unauthorized AI tool creates new entry points for cybercriminals.

Browser-based AI applications run outside corporate security perimeters. Malware disguised as AI apps or browser extensions can use them to gain persistent access to endpoints and, from there, internal networks. Standard endpoint protection may also be unable to inspect the content of encrypted traffic to AI services.

API integrations with unauthorized AI services create hidden data flows. If those integrations hard-code API keys or disable TLS certificate checks, attackers can steal credentials or intercept sensitive information in real time.

Common exploit methods:

- Session hijacking through AI platforms

- Data poisoning attacks on shared models

- Man-in-the-middle attacks on API calls

- Credential harvesting from AI login pages

Financial firms must monitor all AI-related network traffic to identify these threats effectively.

Best Practices: Managing Shadow AI and Protecting Your Firm

Financial firms need three key approaches to control shadow AI: clear policies with governance structures, monitoring tools that prevent data loss, and employee education programs that change workplace culture around AI use.

AI Usage Policies and Governance Structures

Financial firms must create written AI policies that specify which tools employees can use. These policies should list approved AI platforms and ban unauthorized tools like personal ChatGPT accounts.

Policy Requirements:

- Role-based access controls for different job functions

- Data classification rules for client information

- Approval processes for new AI tools

- Regular policy reviews and updates
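Role-based access and data classification rules can be written down as a simple lookup so there is no ambiguity about what each role may do. This is a minimal sketch assuming hypothetical role names, hypothetical tool names, and a four-level classification scale. In practice, these rules would be enforced through the firm's identity, DLP, and gateway systems rather than a script.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical classification scale; higher values are more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. client PII and account data


# Hypothetical policy: for each role, the approved tools and the most
# sensitive data class that may be entered into them.
POLICY = {
    "advisor": {"tools": {"enterprise-copilot"}, "max_class": DataClass.INTERNAL},
    "analyst": {"tools": {"enterprise-copilot", "internal-llm"},
                "max_class": DataClass.CONFIDENTIAL},
    "developer": {"tools": {"internal-llm"}, "max_class": DataClass.INTERNAL},
}


def is_use_permitted(role: str, tool: str, data_class: DataClass) -> bool:
    """Deny by default: unknown roles and unapproved tools are never permitted."""
    rules = POLICY.get(role)
    if rules is None or tool not in rules["tools"]:
        return False
    return data_class <= rules["max_class"]


print(is_use_permitted("advisor", "enterprise-copilot", DataClass.INTERNAL))    # True
print(is_use_permitted("advisor", "enterprise-copilot", DataClass.RESTRICTED))  # False
print(is_use_permitted("advisor", "chatgpt-personal", DataClass.PUBLIC))        # False
```

The deny-by-default choice matters. A tool or role missing from the policy is treated as unapproved, which matches how an approval process for new AI tools is meant to work.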

Governance structures need clear ownership. IT teams should manage technical controls while compliance teams handle regulatory requirements. Security teams must monitor for policy violations.

Firms should establish AI review committees with representatives from IT, compliance, and business units. These committees evaluate new AI requests and update policies as technology changes.

Key Governance Elements:

- Executive sponsorship for AI initiatives

- Clear escalation procedures for violations

- Regular audits of AI tool usage

- Integration with existing risk management frameworks

Monitoring Tools and Data Loss Prevention

DLP systems can detect when employees copy sensitive data into AI platforms. These tools scan for client account numbers, Social Security numbers, and other protected information before it leaves the firm's network.

Network monitoring solutions track which AI websites employees visit. They can block access to unauthorized platforms while allowing approved tools to function normally.
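Blocking by website category usually comes down to matching each requested hostname against lists of known AI domains. This sketch assumes a hypothetical approved gateway (`ai-gateway.example.com`) and a short sample of public AI domains. Real deployments rely on URL categories maintained by the web gateway vendor. The detail worth getting right is subdomain matching: `chat.openai.com` should match `openai.com`, but `notopenai.com` should not.

```python
# Hypothetical lists: a firm would source these from its web gateway's
# URL categories and its own approved-tool register.
APPROVED_AI_DOMAINS = {"ai-gateway.example.com"}
KNOWN_AI_DOMAINS = {"openai.com", "chatgpt.com", "claude.ai",
                    "gemini.google.com", "copilot.microsoft.com"}


def matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    return host == domain or host.endswith("." + domain)


def decide(host: str) -> str:
    """Return 'allow', 'block', or 'allow-unclassified' for a requested host."""
    if any(matches(host, d) for d in APPROVED_AI_DOMAINS):
        return "allow"
    if any(matches(host, d) for d in KNOWN_AI_DOMAINS):
        return "block"
    return "allow-unclassified"  # not a known AI site; other controls still apply


for h in ["chat.openai.com", "notopenai.com", "ai-gateway.example.com", "claude.ai"]:
    print(h, decide(h))
```

Approved domains are checked first, so a sanctioned tool keeps working even if its domain also appears in a broad AI category.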

Essential Monitoring Capabilities:

- Real-time detection of data uploads to AI platforms

- Website categorization and blocking

- User behavior analytics

- Automated alerts for policy violations
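Real-time detection of sensitive uploads starts with pattern rules like the one sketched below for U.S. Social Security numbers. It is a minimal sketch assuming dashed `NNN-NN-NNNN` formatting only. It applies the SSA's structural rules to cut false positives: no area number 000, 666, or 900-999; no group 00; no serial 0000. Commercial DLP engines layer context keywords and confidence scoring on top of rules like this.

```python
import re

# Dashed SSN candidates, e.g. 123-45-6789; word boundaries avoid matching
# inside longer digit runs.
SSN_CANDIDATE = re.compile(r"\b(\d{3})-(\d{2})-(\d{4})\b")


def is_plausible_ssn(area: str, group: str, serial: str) -> bool:
    """Apply SSA structural rules: numbers with these parts are never issued."""
    if area == "000" or area == "666" or area.startswith("9"):
        return False
    return group != "00" and serial != "0000"


def find_ssns(text: str) -> list[str]:
    """Return candidates in text that pass the structural rules."""
    return [m.group(0) for m in SSN_CANDIDATE.finditer(text)
            if is_plausible_ssn(*m.groups())]


prompt = "Client 123-45-6789 vs test value 000-12-3456 and phone 555-123-4567"
print(find_ssns(prompt))  # ['123-45-6789']
```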

Cloud access security brokers (CASBs) provide additional protection by monitoring traffic between users and cloud services. They can identify shadow AI usage patterns and enforce data protection rules across managed devices.

Firms should implement endpoint protection that monitors clipboard activity and file uploads. This catches attempts to paste client data into web-based AI tools.

Culture Change and Employee Education

Training programs must explain why shadow AI creates risks for the firm and its clients. Employees need to understand that using personal AI accounts with client data violates privacy regulations and firm policies.

Regular workshops should demonstrate approved AI tools and show how they protect sensitive information. This gives employees productive alternatives to shadow AI solutions.

Training Components:

- Monthly AI security awareness sessions

- Real-world examples of data breaches from shadow AI

- Hands-on practice with approved tools

- Clear consequences for policy violations

Communication campaigns can highlight the benefits of approved AI platforms. When employees see that sanctioned tools meet their needs, they are less likely to seek unauthorized alternatives.

Managers should model proper AI usage and report shadow AI when they discover it. This creates accountability throughout the organization.

Next Steps: Book an AI Governance Assessment

Financial firms need immediate action to address shadow AI risks. An AI governance assessment provides clear visibility into current AI usage across the organization.

The assessment process typically takes 2-3 weeks. MSP experts will scan networks and systems to identify the AI tools in use. They will map data flows and flag potential compliance violations.

What the assessment includes:

- Complete inventory of AI tools and platforms

- Risk analysis for each identified system

- Data flow mapping to external AI services

- Compliance gap analysis for financial regulations

- Employee usage patterns and behaviors

Key benefits for financial firms:

- Immediate visibility into shadow AI activities

- Risk prioritization based on data sensitivity

- Actionable roadmap for AI governance policies

- Compliance alignment with industry standards

The assessment team will interview key stakeholders across departments. They will review existing policies and technical controls. All findings are documented in a comprehensive report.

The final deliverable includes:

- Executive summary of findings

- Detailed risk assessment matrix

- Policy recommendations and templates

- Technical implementation roadmap

- Budget estimates for remediation

Many firms discover several times more AI usage than they expected. The assessment helps transform shadow AI from a hidden threat into a managed business tool.

Contact your MSP today to schedule an AI governance assessment. Early action prevents data breaches and regulatory violations before they occur.

Frequently Asked Questions

Shadow AI presents complex challenges for financial firms as employees use unauthorized AI tools with sensitive client data. Understanding the specific risks and implementing proper controls requires clear answers to common security and compliance questions.

What are the risks associated with employees using AI tools like ChatGPT without proper oversight?

Financial firms face significant data exposure when employees use unauthorized AI tools. Client portfolios, personal financial information, and proprietary trading strategies can be transmitted to third-party AI platforms outside the firm's security controls.

Many consumer AI services retain user inputs and may use them to improve their models unless users opt out. Sensitive client data could therefore remain on external servers for extended periods. The firm loses control over where this information goes and who might access it.

Compliance violations represent another major risk. Financial firms must follow strict data protection rules like GDPR and industry regulations. Using unauthorized AI tools can create audit trail gaps and regulatory reporting problems.

Biased or inaccurate AI outputs can lead to poor investment advice. Without proper validation processes, employees might rely on flawed AI recommendations when making client decisions.

How can financial firms detect and manage unauthorized use of AI technologies?

Network monitoring tools can identify traffic patterns to known AI platforms. Firms should track web requests to sites like ChatGPT, Claude, and Microsoft Copilot to spot unauthorized usage.
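A first pass at spotting unauthorized usage can be as simple as counting requests to AI domains in web proxy logs. This sketch assumes a hypothetical space-separated log format (`timestamp user host`), a hypothetical watch list, and a hypothetical approved gateway. Real proxy and DNS logs are richer, and a hit is a lead for follow-up, not proof of misuse.

```python
from collections import Counter

# Hypothetical watch list of public AI services and approved exceptions.
AI_DOMAINS = ("openai.com", "chatgpt.com", "claude.ai", "copilot.microsoft.com")
APPROVED = ("ai-gateway.example.com",)


def is_listed(host: str, domains: tuple[str, ...]) -> bool:
    """True if host equals, or is a subdomain of, any listed domain."""
    return any(host == d or host.endswith("." + d) for d in domains)


def shadow_ai_hits(log_lines: list[str]) -> Counter:
    """Count requests per (user, host) to AI domains that are not approved."""
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _, user, host = parts
        host = host.lower()
        if is_listed(host, AI_DOMAINS) and not is_listed(host, APPROVED):
            hits[(user, host)] += 1
    return hits


sample = [
    "2024-05-01T09:00:00Z alice chatgpt.com",
    "2024-05-01T09:05:00Z alice chatgpt.com",
    "2024-05-01T09:07:00Z bob ai-gateway.example.com",
    "2024-05-01T09:10:00Z carol claude.ai",
]
for (user, host), n in shadow_ai_hits(sample).most_common():
    print(user, host, n)
```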

Data Loss Prevention (DLP) systems can scan outbound communications for sensitive data patterns. These tools flag when financial information, Social Security numbers, or client names are sent to unauthorized destinations.

Employee activity monitoring software tracks application usage and file access patterns. This helps identify when staff members copy client data before visiting AI websites.

Regular security audits should include checks for unauthorized software installations. Many AI tools work through browser extensions or desktop applications that bypass traditional IT controls.

User behavior analytics can detect unusual patterns like large data downloads followed by external web activity. These patterns often indicate shadow AI usage.
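The "large download followed by external AI activity" pattern can be expressed as a time-window rule. This is a minimal sketch assuming hypothetical event records, a 50 MB download threshold, and a 15-minute window. A real UBA product would tune these values or learn them from each user's baseline rather than hard-coding them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    user: str
    time: datetime
    kind: str           # "download" or "ai_visit" (hypothetical event types)
    size_mb: float = 0.0


DOWNLOAD_THRESHOLD_MB = 50      # hypothetical threshold
WINDOW = timedelta(minutes=15)  # hypothetical correlation window


def flag_suspicious(events: list[Event]) -> list[tuple[str, datetime]]:
    """Flag AI visits that follow a large download by the same user within WINDOW."""
    flags = []
    last_big_download: dict[str, datetime] = {}
    for e in sorted(events, key=lambda ev: ev.time):
        if e.kind == "download" and e.size_mb >= DOWNLOAD_THRESHOLD_MB:
            last_big_download[e.user] = e.time
        elif e.kind == "ai_visit":
            start = last_big_download.get(e.user)
            if start is not None and e.time - start <= WINDOW:
                flags.append((e.user, e.time))
    return flags


t0 = datetime(2024, 5, 1, 9, 0)
events = [
    Event("alice", t0, "download", 120),
    Event("alice", t0 + timedelta(minutes=5), "ai_visit"),
    Event("bob", t0, "download", 2),                         # small download
    Event("bob", t0 + timedelta(minutes=3), "ai_visit"),
    Event("carol", t0, "download", 80),
    Event("carol", t0 + timedelta(hours=2), "ai_visit"),     # outside window
]
print(flag_suspicious(events))
```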

What measures can organizations implement to protect client data from being exposed by shadow AI?

Endpoint protection software should block access to unauthorized AI platforms. Firms can create blacklists of known AI websites and prevent employees from reaching these destinations.

Data classification systems should tag sensitive client information. This makes it easier for DLP tools to identify when protected data is being sent to unauthorized locations.
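Tagging can be as simple as assigning each record the most sensitive label implied by the fields it contains. Downstream DLP rules can then act on the label instead of re-inspecting content. This sketch assumes hypothetical field names and a hypothetical three-label scheme. Real classification tools also use content inspection and labels applied by users.

```python
# Hypothetical mapping from field names to sensitivity labels.
FIELD_LABELS = {
    "ssn": "restricted",
    "account_number": "restricted",
    "portfolio_holdings": "confidential",
    "risk_profile": "confidential",
    "client_name": "confidential",
    "market_commentary": "internal",
}
RANK = {"internal": 0, "confidential": 1, "restricted": 2}
UNKNOWN_FIELD_LABEL = "confidential"  # fail safe: unmapped fields are not treated as low risk


def classify(record: dict) -> str:
    """Return the most sensitive label among the record's non-empty fields."""
    labels = [FIELD_LABELS.get(name, UNKNOWN_FIELD_LABEL)
              for name, value in record.items() if value]
    return max(labels, key=RANK.__getitem__, default="internal")


print(classify({"client_name": "J. Smith", "risk_profile": "moderate"}))    # confidential
print(classify({"client_name": "J. Smith", "account_number": "12345678"}))  # restricted
print(classify({"market_commentary": "Rates steady"}))                      # internal
```

Defaulting unmapped fields to "confidential" is a deliberate choice. A new field nobody has reviewed yet is handled cautiously until someone classifies it.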

Network segmentation can isolate client data systems from internet access. Critical financial databases should operate on separate networks with limited external connectivity.

Employee training programs must address AI usage policies. Staff need clear guidelines about which AI tools are approved and how to handle client data properly.

Regular data access audits help identify who has access to sensitive information. Firms should limit client data access to only those employees who need it for their specific roles.

Encryption of sensitive data at rest and in transit adds a layer of protection against interception and theft. It cannot protect data that an employee decrypts and pastes into an AI platform, however. Encryption therefore complements DLP and access controls rather than replacing them.

Why is the inclusion of shadow AI in an organization's AI model catalog critical for security?

An AI model catalog provides visibility into all AI tools used within the organization. This includes both approved applications and unauthorized shadow AI tools that employees are using.

Security teams need complete inventories to assess risk properly. Unknown AI tools create blind spots in security monitoring and compliance reporting.

The catalog helps establish proper governance frameworks. Each AI tool can be evaluated for security controls, data handling practices, and regulatory compliance requirements.

Risk assessment becomes more accurate with complete AI visibility. Security teams can prioritize threats and allocate resources based on actual usage patterns rather than assumptions.
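A catalog entry only needs a few attributes to support that prioritization. This sketch assumes hypothetical tool entries and an illustrative scoring rule, not a standard risk methodology. The score is the sensitivity of data observed flowing to the tool multiplied by its active users. It is doubled when the tool is unapproved and doubled again when the provider's terms for the tier in use allow training on inputs.

```python
from dataclasses import dataclass

# Illustrative weights for the most sensitive data observed per tool.
SENSITIVITY = {"public": 1, "internal": 2, "confidential": 3, "restricted": 4}


@dataclass
class CatalogEntry:
    name: str
    approved: bool
    active_users: int
    max_data_seen: str      # most sensitive class observed going to the tool
    trains_on_inputs: bool  # per provider terms for the tier in use


def risk_score(e: CatalogEntry) -> int:
    """Illustrative score: sensitivity x usage, doubled for each aggravating factor."""
    score = SENSITIVITY[e.max_data_seen] * e.active_users
    if not e.approved:
        score *= 2
    if e.trains_on_inputs:
        score *= 2
    return score


catalog = [
    CatalogEntry("approved-ai-gateway", True, 120, "confidential", False),
    CatalogEntry("personal chatbot accounts", False, 35, "restricted", True),
    CatalogEntry("grammar browser extension", False, 60, "internal", False),
]
for e in sorted(catalog, key=risk_score, reverse=True):
    print(f"{e.name}: {risk_score(e)}")
```

Even the approved gateway ranks high here because of its heavy use. Being sanctioned does not make a tool low risk; it means the risk is known and managed.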

Compliance auditors expect organizations to maintain complete technology inventories. AI tools missing from official catalogs can lead to examination findings and, in some cases, penalties.

How can a Managed Service Provider (MSP) assist firms in establishing effective AI usage policies?

MSPs provide specialized expertise in AI governance frameworks. They understand financial industry regulations and can develop policies that meet specific compliance requirements.

Technical implementation support helps firms deploy monitoring tools and security controls. MSPs can configure DLP systems, network monitoring, and endpoint protection specifically for AI-related risks.

Policy development includes creating clear guidelines for approved AI tools. MSPs help define acceptable use cases, data handling requirements, and approval processes for new AI technologies.

Staff training programs can be developed and delivered by MSPs. These cover AI security awareness, proper usage procedures, and incident reporting requirements.

Ongoing monitoring services ensure policies remain effective. MSPs can provide 24/7 surveillance of AI-related security events and compliance violations.

What are best practices for creating a data loss prevention strategy that addresses the use of unsanctioned AI?

Content inspection rules should identify financial data patterns. DLP systems need to recognize account numbers, Social Security numbers, portfolio details, and other sensitive client information.

Real-time blocking capabilities prevent data transmission to unauthorized AI platforms. When sensitive data is detected in outbound communications, the system should stop the transfer immediately.
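The sketch below shows how an inspection rule and a blocking decision fit together, using a hypothetical rule set. Payment card numbers are detected with the Luhn checksum, which filters out most random 13-19 digit strings. A hypothetical internal format, `ACCT-` followed by eight digits, stands in for the firm's own account identifiers. The function names are illustrative; in practice this logic runs inside the DLP or proxy product, not in application code.

```python
import re

# 13-19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
ACCOUNT_ID = re.compile(r"\bACCT-\d{8}\b")  # hypothetical internal format


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def inspect(text: str) -> list[str]:
    """Return the names of rules that matched the outbound text."""
    findings = []
    for m in CARD_CANDIDATE.finditer(text):
        if luhn_valid(re.sub(r"[ -]", "", m.group(0))):
            findings.append("payment_card")
            break
    if ACCOUNT_ID.search(text):
        findings.append("internal_account_id")
    return findings


def inspect_and_decide(text: str, destination_approved: bool) -> str:
    """Block sensitive content to unapproved destinations; alert on approved ones."""
    if not inspect(text):
        return "allow"
    return "alert" if destination_approved else "block"


print(inspect_and_decide("Card 4111 1111 1111 1111 for client", False))  # block
print(inspect_and_decide("Summarize ACCT-12345678 activity", True))      # alert
print(inspect_and_decide("Draft a note on rate outlook", False))         # allow
```

Sending sensitive content to an approved destination raises an alert here rather than a block. That assumes the firm's policy clears some restricted data for sanctioned tools; a stricter firm might block both cases.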

User education remains essential for DLP effectiveness. Employees need to understand why certain actions trigger security alerts and how to handle client data appropriately.

Regular policy updates ensure DLP rules stay current with new AI platforms. Security teams should monitor emerging AI tools and update blocking rules accordingly.

Incident response procedures must address AI-related data exposure. Firms need clear steps for investigating potential breaches and notifying affected clients when necessary.

Integration with existing security tools improves overall protection. DLP systems should work together with endpoint protection, network monitoring, and user behavior analytics for comprehensive coverage.